A/B testing, also known as split testing, is a method of comparing two versions of a web page, email, or other digital asset to determine which one performs better.

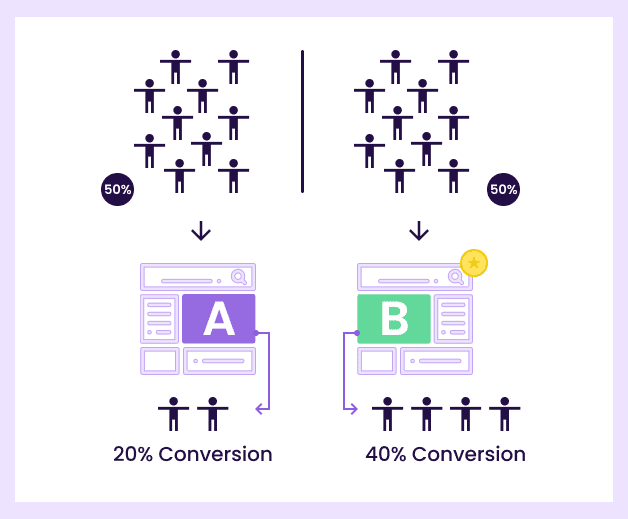

In an A/B test, a random subset of users is presented with one version of the asset (version A), while another random subset is presented with a slightly different version (version B). The performance of each version is then measured and compared to determine which version is more effective in achieving a desired outcome, such as increased clicks, conversions, or engagement.

As part of conversion rate optimization, A/B testing can be used for a variety of purposes, including improving website design, optimizing email campaigns, testing product features, and more. It is a popular method among digital marketers, as it provides a way to make data-driven decisions and improve the effectiveness of digital marketing efforts.

How Does A/B Testing Work?

As mentioned in the previous section, A/B testing is a way to compare two versions of something to see which one works better. For example, if you have a website and you want to know which version of your homepage gets more clicks, you could create two versions of the homepage: version A and version B.

Then, you randomly show version A to some visitors and version B to others. You then measure how each version performs, such as how many clicks each version gets, how long people stay on each version, or how many people complete a certain action on each version.
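One common way to implement the random split is deterministic hashing, so a returning visitor keeps seeing the same version. The minimal Python sketch below assumes each visitor has a stable ID (for example, from a first-party cookie). `assign_variant` and the experiment name are hypothetical, and this is not necessarily how any particular testing tool does it.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a visitor to one variant of an experiment (hypothetical helper).

    Hashing (experiment, user_id) gives every visitor a stable, effectively random
    bucket, so the same visitor always sees the same version of the page.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Interpret the first 8 bytes of the SHA-256 digest as an unsigned integer.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    # Split traffic evenly across however many variants were passed in.
    return variants[bucket % len(variants)]


if __name__ == "__main__":
    variants = ["A", "B"]
    counts = {v: 0 for v in variants}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "homepage-test", variants)] += 1
    print(counts)  # roughly 5,000 visitors per version
```

Because the bucket depends on the experiment name as well as the visitor ID, the same visitor can land in different groups in different experiments, which keeps tests independent of one another. Passing three or more variant names gives an even A/B/n split.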

By comparing the test results, you can see which version performs better. You can then use the better-performing version to improve your website, email, or other digital assets to achieve your desired outcome, such as more clicks or conversions.

How to Do A/B Testing

A/B testing can be used for a variety of purposes, and it’s a valuable tool for making data-driven decisions to improve your digital marketing efforts.

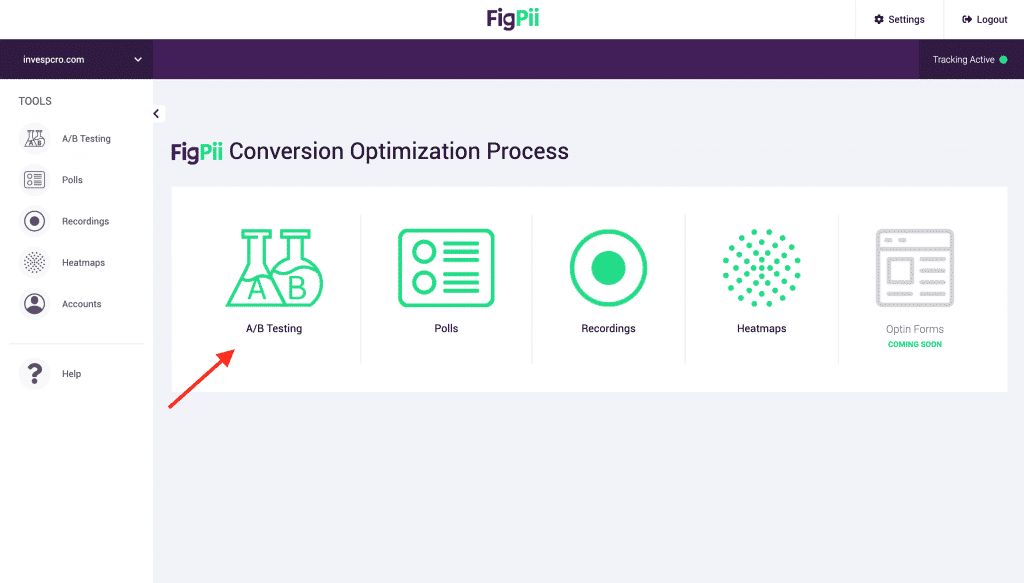

Let me walk you through the process of creating your first A/B test using FigPii.

1. Log in to FigPii.

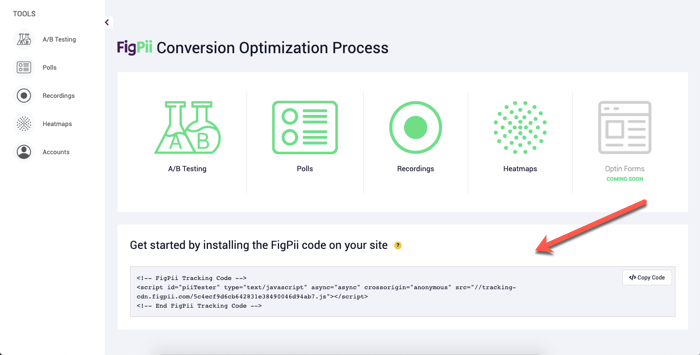

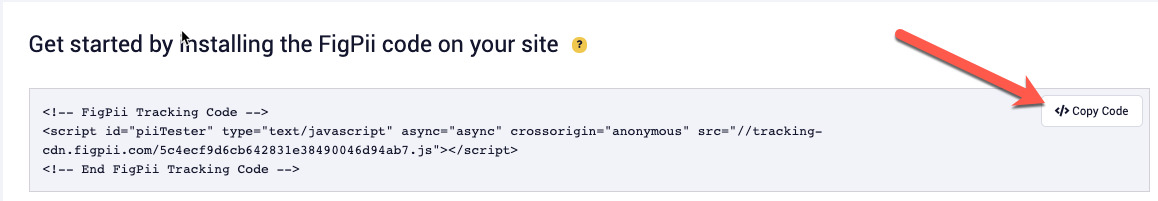

2. The FigPii code will appear at the bottom section of the dashboard.

3. Click on “copy code.”

4. Paste the FigPii Tracking Code into your website’s <head> section.

FigPii integrates with major CMS and e-commerce websites; check our full list of integrations right here.

5. Check your FigPii dashboard to verify the installation. When the tracking code is installed on your site, the tracking indicator will show you that the code is active.

Once you have added the FigPii Tracking Code to your site, it is usually detected the next time a page with the code installed loads. However, there can be a delay of up to an hour before it shows as “Active”.

6. Log in to FigPii’s dashboard and open the A/B testing section from the side menu.

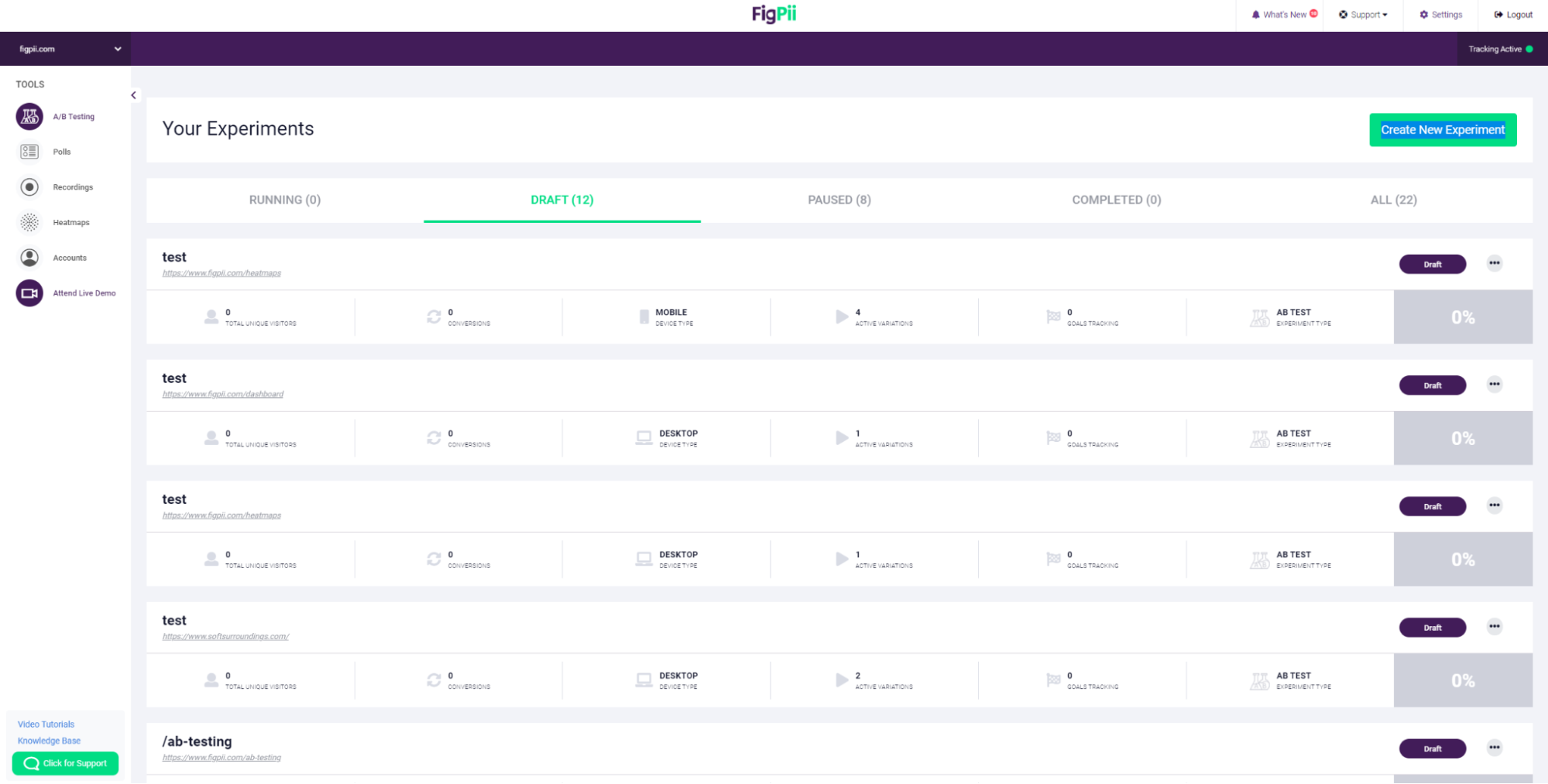

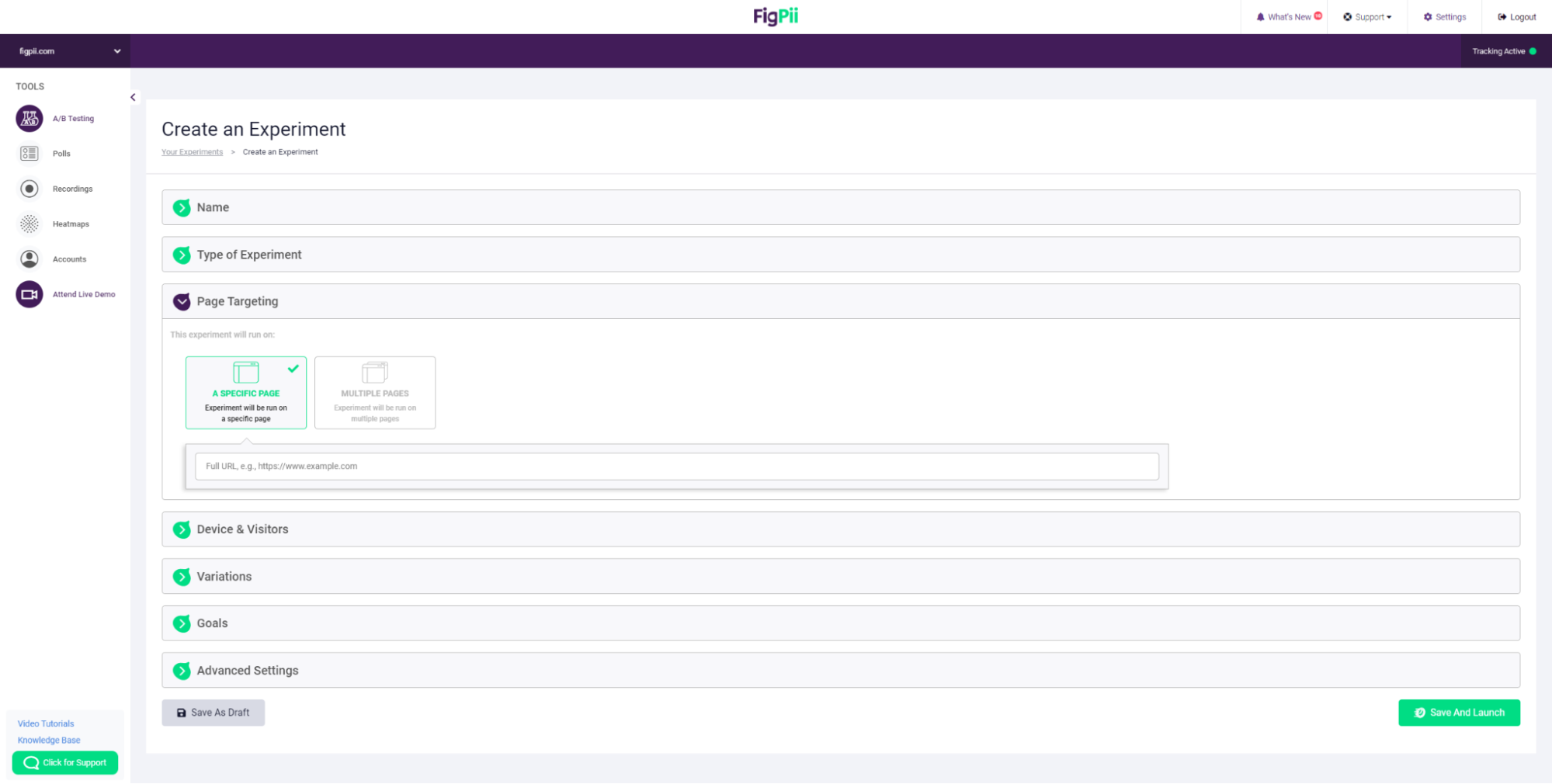

7. Click on Create New Experiment

8. Choose your A/B test name

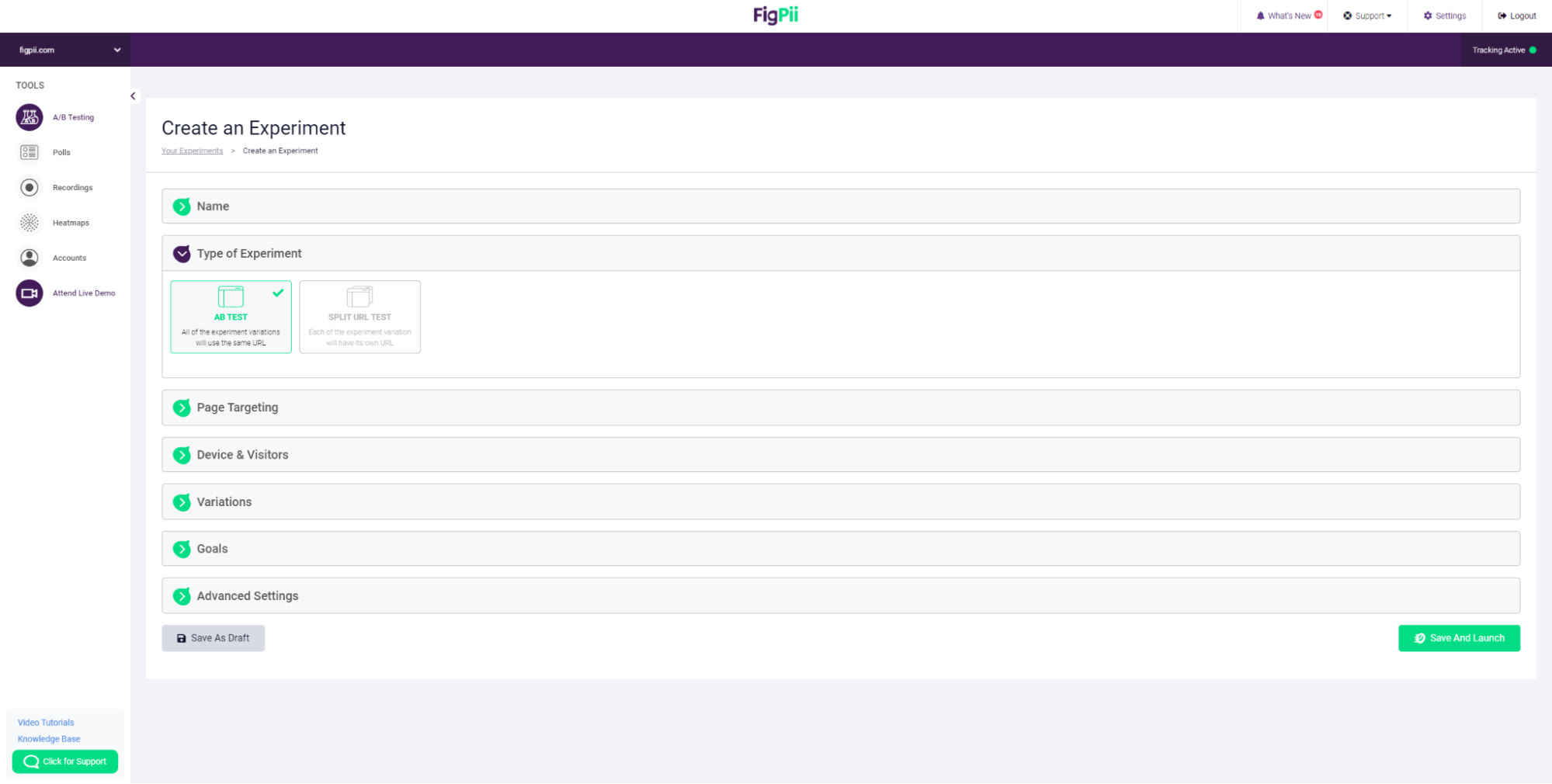

9. Choose whether your experiment is an A/B test

10. Choose whether you want to test just one page or multiple pages

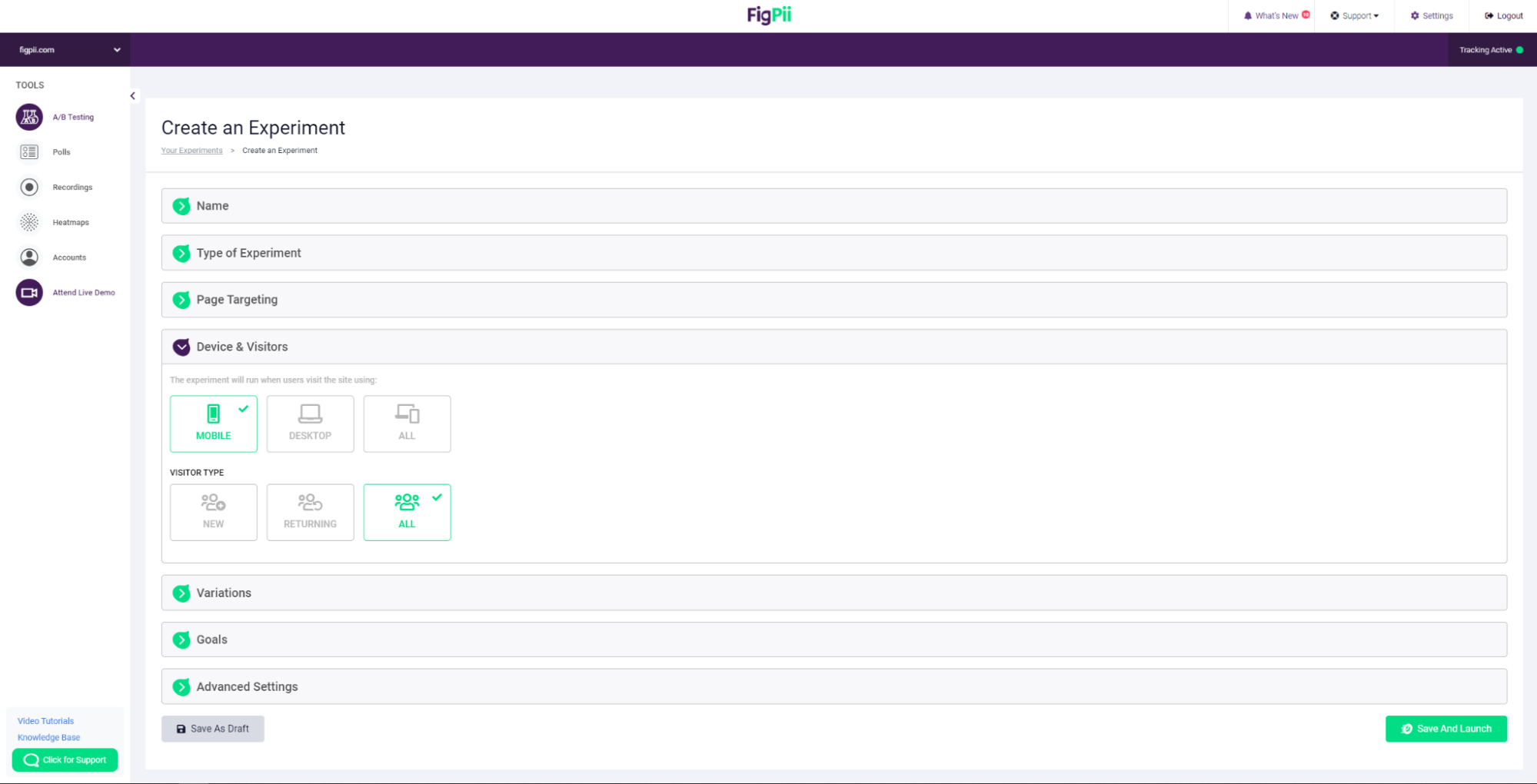

11. You can choose specific parameters to help you design an accurate experiment so you can test things like:

- Which copy converts more visitors to customers?

- Which CTA is getting more clicks?

- Did changing these sections make any difference?

- And much more

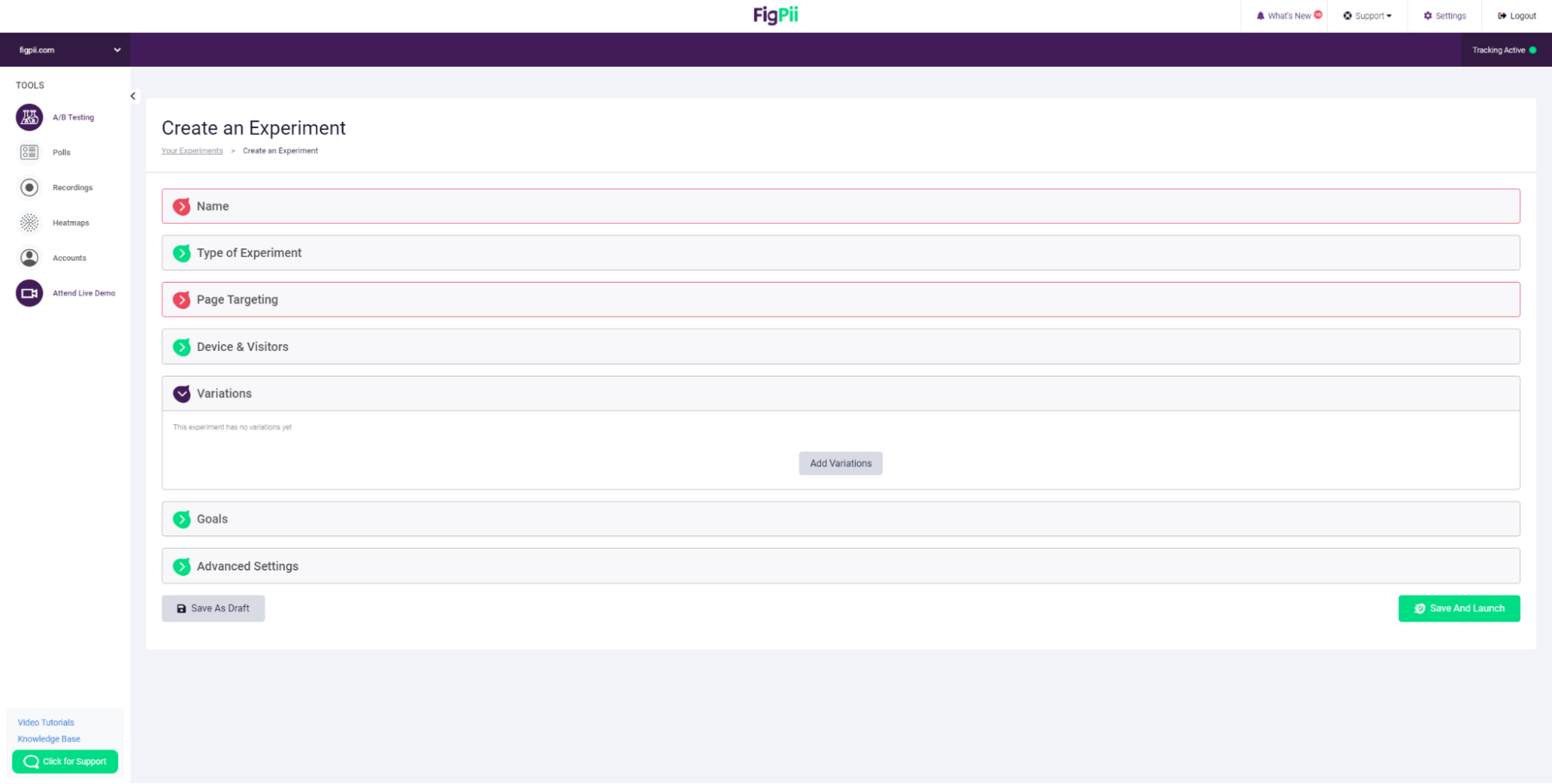

12. Add your first variation, then repeat this step to add more variations to the experiment.

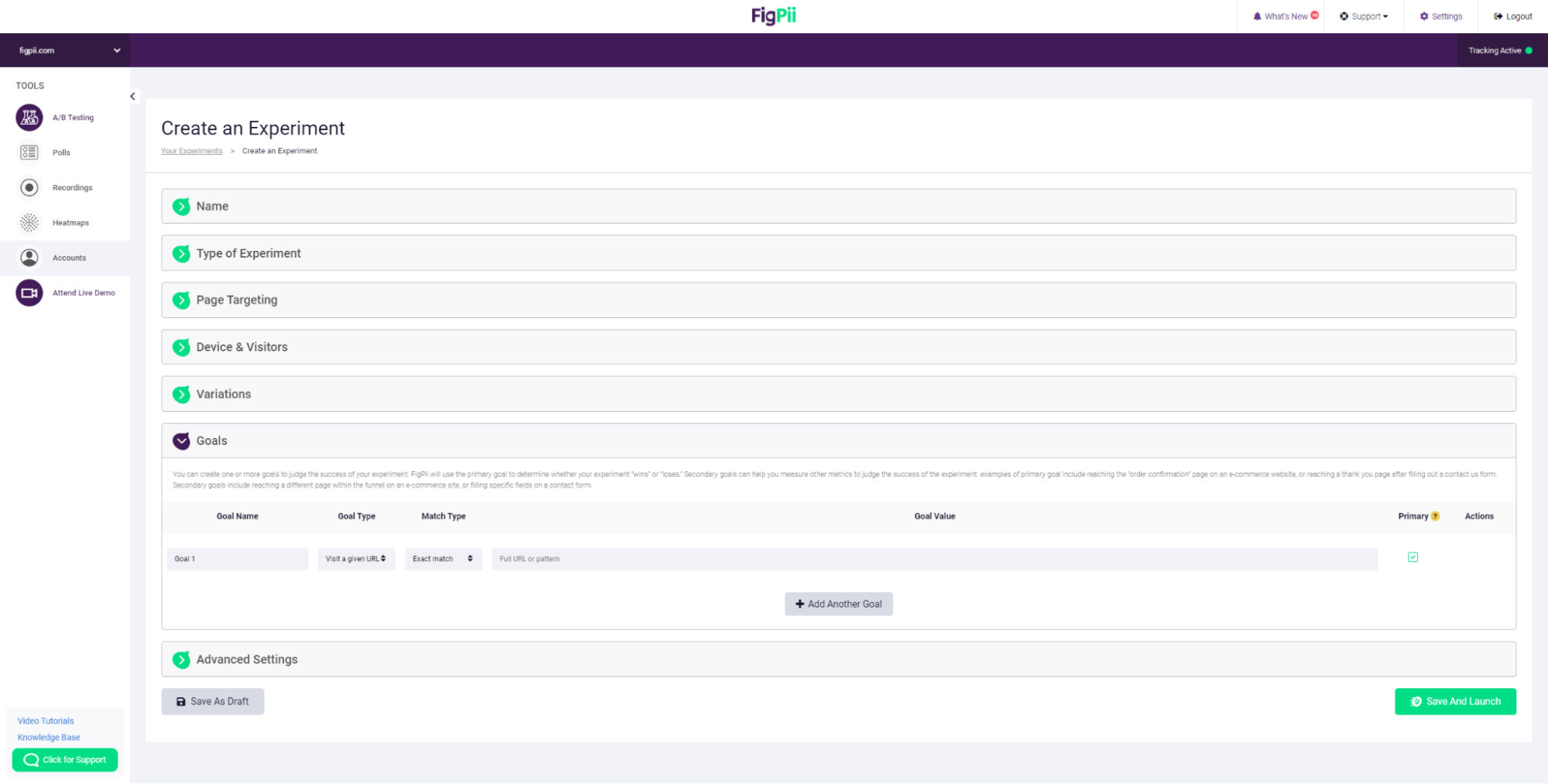

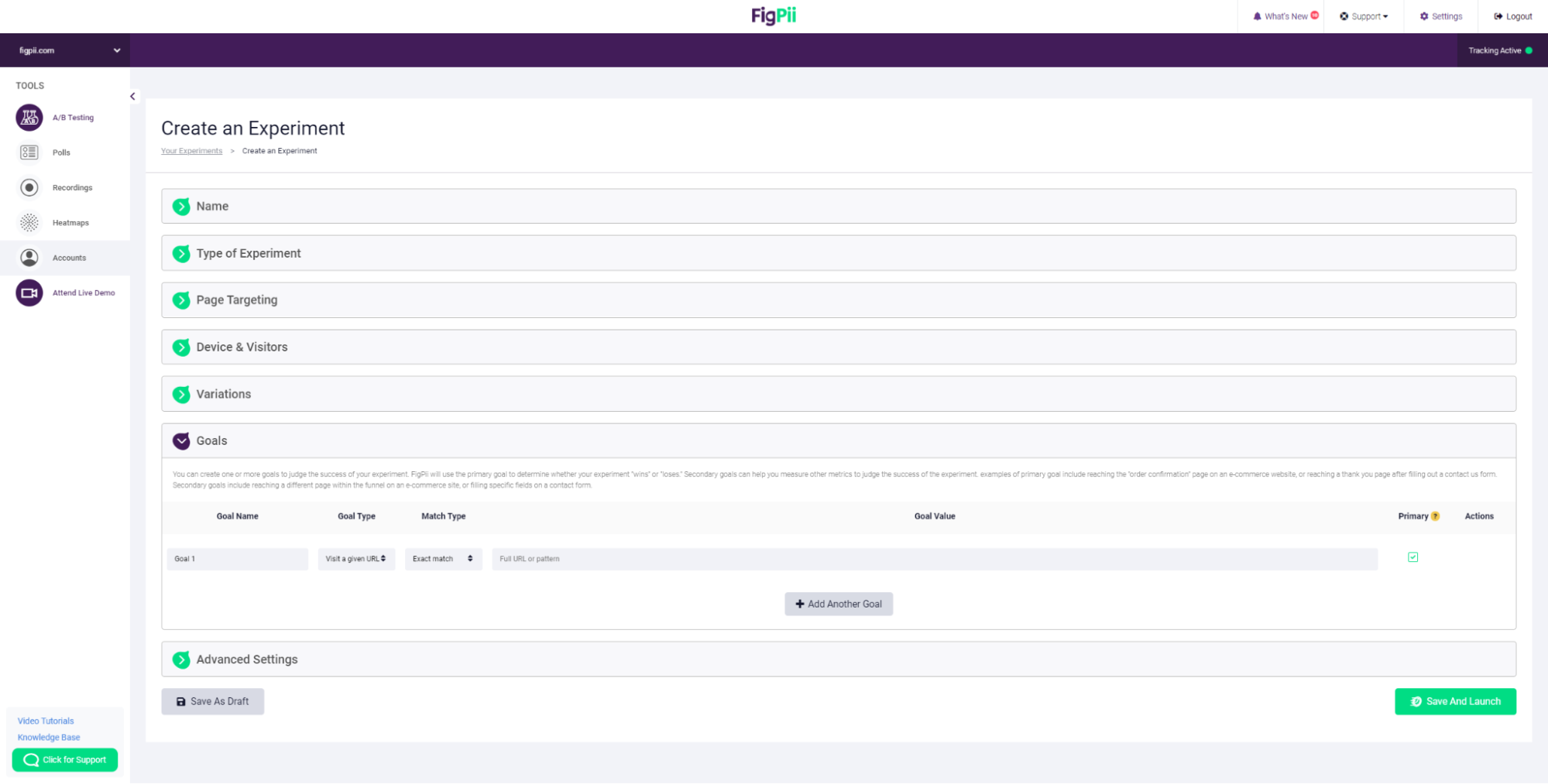

13. Create your goals. You can create one or more goals to judge the success of your experiment.

FigPii will use the primary goal to determine whether your experiment “wins” or “loses.” Secondary goals can help you measure other metrics to judge the success of the experiment.

Examples of primary goals include reaching the “order confirmation” page on an e-commerce website or reaching a thank-you page after filling out a “Contact Us” form. Examples of secondary goals include reaching a different page within the funnel on an e-commerce site or filling in specific fields on a contact form.

14. Refine your experiment using our advanced options to make your experiments more impactful.

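And that’s it, you’re done! You now have an A/B testing experiment. Let it run until it has collected enough data to reach statistical significance (as a rule of thumb, at least one to two full weeks), then check the results to see which of your variations was declared the winner.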

Now you might be starting to think, okay, but how does an A/B test help me achieve more?

Why Is A/B Testing Important?

There is a positive correlation between launching several A/B tests and improving your website conversion rates. Here is why A/B testing is important:

1. Data-driven decision-making

A/B testing allows you to make data-driven decisions by comparing the performance of two or more versions of something, such as a webpage, email, or ad. By measuring the performance of each version, you can determine which version is more effective in achieving your desired outcome, such as increased clicks, conversions, or engagement.

2. Improves user experiences

A/B testing can help you improve the user experience by testing different design or content elements to see which ones resonate better with your target audience. By identifying the elements that work best, you can create a more engaging and user-friendly experience that meets the needs of your audience.

3. Increases conversions

A/B testing can help you increase conversions by testing different variations of your call-to-action (CTA) buttons, forms, or other conversion points. By identifying the variation that performs best, you can optimize your conversion rate and achieve your business goals.

4. Cost-effective

A/B testing is a cost-effective way to improve your digital marketing efforts. By testing different variations of your digital assets, you can make incremental improvements without investing significant resources in a complete redesign or overhaul.

5. Competitive advantage

A/B testing can give you a competitive advantage by helping you stay ahead of the competition. You can continuously test and optimize your digital assets to improve your marketing performance and attract more customers.

6. Decreases bounce rates

A/B testing can help reduce bounce rates by identifying and addressing the factors that cause visitors to leave your website without taking any action. You can improve engagement, reduce bounce rates, and increase conversions by testing different page layouts, optimizing page speed, testing headlines and messaging, and testing offers and promotions.

What Can You A/B Test: 12 A/B Testing Examples

Instead of testing elements such as button sizes, colors, and font styles, you should focus on high-quality tests that drive conversions. Here are A/B testing examples you should launch on your website:

1. Navigation menus

Test different navigation menus to see which ones are more intuitive and user-friendly for your visitors. For example, you could test a dropdown menu versus a hamburger menu to see which one is more effective in helping visitors find what they’re looking for.

2. Social proof

Test the inclusion of social proof elements, such as customer reviews or ratings, to see how they affect conversions. For example, you could test the inclusion of a five-star rating system versus a text-based review system to see which one is more effective in building trust with your visitors.

3. Pricing

Test different pricing strategies to see which ones generate the most revenue. For example, you could test a discount off the regular price versus a bundle deal to see which one is more effective in incentivizing purchases.

4. Content length

Test different content lengths, such as short versus long-form, to see which generates the most engagement or conversions. For example, you could test a short product description versus a longer, more detailed one to see which one is more effective in convincing visitors to purchase.

5. Pop-ups

Test different pop-up designs or timing to see which ones generate the most leads or conversions. For example, you could test a pop-up that appears immediately upon page load versus a pop-up that appears after a visitor has been on the page for a certain amount of time to see which one is more effective in capturing visitor attention.

6. Headlines

Test different headlines to see which ones generate the most clicks or conversions. For example, you could test a straightforward headline versus a more creative or attention-grabbing headline to see which one resonates best with your audience.

7. Images

Test different images to see which ones generate the most engagement or conversions. For example, you could test a product photo versus a lifestyle photo to see which is more effective in showcasing your product.

8. Call-to-action (CTA) copy

Test different CTA copies to see which ones generate the most clicks or conversions. For example, you could test “Buy Now” versus “Add to Cart” to see which is more effective in getting visitors to act.

9. Forms

Test different form layouts or form fields to see which ones generate the most completions. For example, you could test a long form versus a short form to see which one is more effective in reducing form abandonment.

10. Landing pages

Test different landing page designs or content to see which ones generate the most conversions. For example, you could test a video landing page versus a text-based landing page to see which one is more effective in engaging your audience.

11. Email subject lines

Test different email subject lines to see which ones generate the highest open or click-through rates. For example, you could test a straightforward subject line versus a more creative or personalized one to see which one is more effective at getting recipients to open your emails.

12. Form designs

Test different form designs to see which ones generate the most conversions. For example, you could test a single-page checkout form versus a multi-page form to see which one is more effective in reducing form abandonment.

What Are the Different Types of A/B Testing?

There are different types of A/B tests. Each type has its benefits and drawbacks – this means that the type of testing you choose should depend on your specific goals and circumstances.

Multivariate testing

Multivariate testing is a type of A/B testing that involves testing multiple variations of a webpage or interface with multiple variables changed between them. This type of testing can help you identify the impact of different combinations of changes.
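To see why multivariate tests grow quickly, the short Python sketch below enumerates a full-factorial design (every option of every element combined with every other) using `itertools.product`. The elements and options are hypothetical examples.

```python
from itertools import product

# Hypothetical page elements and the options being tested for each.
elements = {
    "headline": ["Save time today", "Work smarter"],
    "hero_image": ["product", "lifestyle", "illustration"],
    "cta": ["Buy Now", "Add to Cart"],
}

# Full-factorial design: one variation per combination of options.
combinations = [dict(zip(elements, combo)) for combo in product(*elements.values())]

print(len(combinations))  # 2 * 3 * 2 = 12 variations
for combo in combinations[:3]:
    print(combo)
```

Each extra element multiplies the number of variations, and traffic is divided among all of them, which is why multivariate tests need far more traffic than a simple A/B test.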

Split testing

Split testing, also known as split URL testing, is a type of A/B testing that involves testing two different versions of a web page or interface hosted at different URLs. This can be useful if you want to test major changes that can’t be made with simple changes to HTML or CSS.

A/B/n testing

A/B/n testing is a type of A/B testing that involves testing more than two variations of a webpage or interface. This can be useful if you want to test more than two variations of a page or if you have multiple ideas for changes to test.

Bandit testing

Bandit testing is a type of A/B testing that uses multi-armed bandit algorithms, a form of machine learning, to dynamically allocate traffic to web page or interface variations based on their performance. This can be useful if you have many variations to test or want to send more traffic to the best performers while the test is still running.
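As an illustration of how a bandit shifts traffic, here is a minimal Python simulation of Thompson sampling, one common multi-armed bandit algorithm; it is not necessarily what any particular testing tool uses. The conversion rates passed in are hypothetical and exist only to simulate visitor behavior.

```python
import random


def thompson_sampling(true_rates: list[float], visitors: int, seed: int = 1) -> list[int]:
    """Simulate a Beta-Bernoulli Thompson sampling bandit.

    true_rates are hypothetical conversion rates used only to simulate visitors.
    Returns how many visitors were sent to each variation.
    """
    rng = random.Random(seed)
    n = len(true_rates)
    successes = [0] * n
    failures = [0] * n
    shown = [0] * n
    for _ in range(visitors):
        # Draw a plausible conversion rate for each variation from its
        # Beta posterior (uniform Beta(1, 1) prior) and show the highest draw.
        samples = [rng.betavariate(successes[i] + 1, failures[i] + 1) for i in range(n)]
        choice = max(range(n), key=lambda i: samples[i])
        shown[choice] += 1
        # Simulate whether this visitor converts on the variation shown.
        if rng.random() < true_rates[choice]:
            successes[choice] += 1
        else:
            failures[choice] += 1
    return shown


if __name__ == "__main__":
    print(thompson_sampling([0.02, 0.05, 0.10], visitors=10_000))
```

Each visitor goes to the variation whose sampled conversion rate is highest, so traffic drifts toward the strongest variation while weaker ones still get occasional exposure.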

What Do You Need to Launch a Successful A/B Test?

If you want to run tests, you will typically need the following:

A testing tool

A variety of A/B testing tools are available, ranging from simple, easy-to-use options to platforms with advanced capabilities. You can use FigPii to launch your A/B test.

Test Hypothesis

You’ll need to identify what you want to test and develop a hypothesis about what you think will happen when you make changes to your website or application. This hypothesis will help guide your testing and ensure that you are testing changes that are relevant to your business goals.

Test variations

You’ll need to create multiple versions of the webpage or application you are testing. Typically, you’ll create at least two: the control (the original version) and the treatment, or variation (the version with changes).

Website Traffic

You’ll need enough traffic to your website or application to generate meaningful results. You can also choose how to allocate traffic between the control and treatment groups; with two versions, an even 50/50 split generally reaches statistical significance with the fewest total visitors.

Tracking and analytics

You’ll need to ensure that you are tracking the relevant metrics to measure the success of your A/B test. This may include conversion rates, bounce rates, time on site, and others.
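To make these metrics concrete, the Python sketch below computes per-variant rates from raw visit data. The data format, the visitor records, and the page paths (`/cart`, `/order-confirmation`) are all hypothetical; in practice, your testing or analytics tool records this for you.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical record format: (visitor_id, variant, pages viewed in order).
Visit = tuple[str, str, list[str]]

visits: list[Visit] = [
    ("v1", "A", ["/home", "/product", "/cart", "/order-confirmation"]),
    ("v2", "A", ["/home"]),
    ("v3", "B", ["/home", "/product", "/cart"]),
    ("v4", "B", ["/home", "/product", "/cart", "/order-confirmation"]),
    ("v5", "B", ["/home", "/product"]),
    ("v6", "A", ["/home", "/product"]),
]


def rate_by_variant(visits: list[Visit], hit: Callable[[list[str]], bool]) -> dict[str, float]:
    """Share of each variant's visitors whose page list satisfies `hit`."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for _visitor_id, variant, pages in visits:
        totals[variant] += 1
        if hit(pages):
            hits[variant] += 1
    return {variant: hits[variant] / totals[variant] for variant in sorted(totals)}


primary = rate_by_variant(visits, lambda pages: "/order-confirmation" in pages)  # conversion rate
secondary = rate_by_variant(visits, lambda pages: "/cart" in pages)  # funnel step reached
bounce = rate_by_variant(visits, lambda pages: len(pages) == 1)  # single-page visits
print(primary, secondary, bounce)
```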

Time

You’ll need to run your test long enough to collect sufficient data to reach statistical significance. The time required will depend on your sample size, the statistical significance level you aim for, and how much traffic your site receives.

With these elements in place, you can launch an A/B test and start gathering data to help you make informed decisions about the changes you want to make to your website or application.

How to Calculate A/B Testing Sample Size for Statistically Significant Results

Calculating the appropriate sample size for an A/B test is important to ensure that the test results are statistically significant and can be confidently applied to your entire audience. Here’s a basic formula to calculate the sample size for an A/B test:

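$$n = \frac{Z^2 \, p \, (1 - p)}{E^2}$$

Where:

- Z (Z-score): The Z-score is determined by the level of confidence you want to have in your results. For example, a Z-score of 1.96 corresponds to a 95% confidence level.

- p (expected conversion rate): Your best estimate of the conversion rate, usually your current baseline, expressed as a proportion (for example, 0.05 for 5%). The term p(1 − p) is the variance of a convert/don’t-convert outcome, so it captures how variable your data is.

- E (margin of error): The maximum amount of error you are willing to tolerate in your results, also expressed as a proportion (for example, 0.01 for ±1 percentage point).

This formula estimates how many visitors each version needs for its conversion rate to be measured within the margin of error. It does not account for statistical power or the minimum difference you want to detect between versions, which dedicated sample size calculators also factor in.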

To use this formula, you must determine the appropriate values for each variable based on your specific situation. In general, the higher the confidence level you want to have in your results, the larger the sample size you’ll need.
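Here is the basic formula n = Z² × p(1 − p) / E² as a small Python function, where p is the expected conversion rate and E is the margin of error, both given as proportions. `sample_size` is a hypothetical helper name, and the example numbers are illustrative.

```python
import math


def sample_size(p: float, margin_of_error: float, z: float = 1.96) -> int:
    """n = Z^2 * p * (1 - p) / E^2, rounded up to a whole visitor.

    p: expected conversion rate as a proportion (e.g. 0.05 for 5%).
    margin_of_error: E as a proportion (0.01 = plus or minus 1 percentage point).
    z: 1.96 corresponds to a 95% confidence level.
    """
    return math.ceil(z**2 * p * (1 - p) / margin_of_error**2)


print(sample_size(0.05, 0.01))  # 1825
```

With a 5% baseline conversion rate and a ±1 percentage point margin of error at 95% confidence, this gives 1,825 visitors per version. Halving the margin of error to ±0.5 points quadruples the requirement to 7,300.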

There are also online A/B testing calculators and tools available to help you calculate the appropriate sample size for your A/B test. Some popular tools include Optimizely’s sample size calculator, AB Testguide’s calculator, and Evan Miller’s A/B Test Sample Size Calculator.
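Calculators like these typically work from the baseline conversion rate, the smallest lift you want to detect, the significance level, and statistical power. The Python sketch below uses one common normal-approximation formula for a two-proportion test; it is an illustration, not the exact method of any of the tools named above, so results may differ slightly. The function name and example rates are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant to detect a change from p_control to p_variant.

    Normal-approximation formula for a two-sided, two-proportion z-test:
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p_control - p_variant) ** 2)


# Baseline 10% conversion rate, hoping to detect a lift to 12%.
print(sample_size_per_variant(0.10, 0.12))  # about 3,840 visitors per variant
```

Asking for more power, a stricter significance level, or a smaller detectable lift all increase the required sample.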

Remember, it’s important to ensure that your sample size is large enough to produce statistically significant results but not so large that it becomes impractical or expensive to conduct the test.

How to Analyze A/B Test Results

Your A/B test will either succeed or fail, or the results will be inconclusive. Whatever the outcome, you must analyze the results and make sure the data is reliable. Here are the steps involved in analyzing A/B test results:

Identify the key metrics

Before you begin analyzing your A/B test results, it’s important to identify the key metrics you will use to measure the success of your test. These might include conversion rates, click-through rates, bounce rates, or any other relevant metrics.

Check to see if the test reached statistical significance

When running an A/B test, the level of statistical significance you aim for can vary depending on your specific goals and the size of your sample. However, a common threshold for statistical significance is 90%. Strictly speaking, reaching 90% significance means that if there were truly no difference between your variations, a difference at least as large as the one you observed would appear by random chance less than 10% of the time (a p-value below 0.10). Many teams use a stricter 95% threshold (a p-value below 0.05).

Reaching 90% statistical significance is important because it indicates a reasonable level of confidence in your test results. It suggests that the differences you observed between your variations are likely due to the changes you made to your website or application rather than random chance.

If your test results do not reach 90% statistical significance, the differences you observed could plausibly be explained by random chance. In this case, making changes based on these results is not advisable, as the changes may not lead to the outcomes you’re hoping for.

It’s important to note that statistical significance is just one factor to consider when analyzing A/B test results. You should also consider other factors, such as the size of the difference between your variations and the practical significance of the results. By carefully considering all of these factors, you can make informed decisions about the changes you want to make to your website or application.
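One standard way to check significance for conversion rates is a two-proportion z-test. The Python sketch below uses hypothetical results (200 conversions from 5,000 control visitors versus 250 from 5,000 variation visitors) and also reports the relative lift, since the size of the difference matters as well as its significance. Testing tools may use different statistical methods, such as Bayesian approaches.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided pooled z-test for a difference in conversion rates.

    Returns (z statistic, p-value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control 200/5,000 (4.0%), variation 250/5,000 (5.0%).
z, p = two_proportion_z_test(200, 5_000, 250, 5_000)
lift = (250 / 5_000) / (200 / 5_000) - 1
print(f"z = {z:.2f}, p = {p:.3f}, relative lift = {lift:.0%}")
print("significant at 90%:", p < 0.10)
```

Here the p-value is about 0.016, below 0.10, so the result clears a 90% significance threshold (and a 95% one as well), with a 25% relative lift.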

Analyze the results

Once you have determined the statistical significance of your A/B test results, you can begin analyzing the data to identify the reasons for the differences between the variations. Look for patterns and trends in the data, and consider factors such as user behavior, demographics, and other variables that may have impacted the results.

Draw conclusions and take action

Based on your analysis of the A/B test results, draw conclusions about what worked and what didn’t, and use this information to make changes to your website or application. Take action based on your conclusions, and continue testing and optimizing to improve your results over time.

Deadly A/B Testing Mistakes You Should Avoid

When done right, A/B testing can positively impact ROI. On the other hand, it can negatively impact conversions when done wrong. Here are some of the A/B testing mistakes to avoid:

1. Not having a clear hypothesis

It’s important to have a clear hypothesis about what you want to test and why. Without a clear hypothesis, you may end up making random changes to your website or application that don’t lead to any meaningful improvements.

2. Testing too many variables at once

Testing too many variables at once can make it difficult to determine which change is responsible for any observed differences between test groups. It’s best to test one variable at a time to isolate the effects of each change.

3. Not collecting enough data

It’s important to collect enough data to ensure that your test results are statistically significant. You may make decisions based on incomplete or inaccurate information if you don’t collect enough data.

4. Ignoring the context of your test

The context of your test can significantly impact the results. For example, a change that works well for one audience may not work well for another audience. It’s important to consider the context of your test and tailor your changes accordingly.

5. Making decisions based on inconclusive results

It’s important to wait until your test results are statistically significant before making any decisions based on them. Making decisions based on inconclusive results can lead to poor decisions and wasted resources.

6. Not following best practices for testing

There are many best practices for A/B testing, such as testing on a small portion of your traffic initially, testing for a sufficient length of time, and testing during periods of stable traffic. Not following these best practices can lead to inaccurate or unreliable results.

7. Not testing long enough

A general rule of thumb is to run tests for at least two weeks so that your results cover complete weekly cycles of user behavior and are less likely to reflect short-term noise. Running tests for too short a period can lead to inconclusive or misleading results. For example, if a test is run for only a few days and the results show a significant difference between test groups, it may be tempting to conclude that the tested variable had a significant impact on the outcome, even though that early difference could shrink or disappear as more data comes in.
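The cost of stopping early can be illustrated with a small simulation. The Python sketch below runs hypothetical A/A tests, where both groups share the same true conversion rate, so every “significant” result is a false positive. It compares checking the p-value after every batch of traffic and stopping at the first significant result against testing once at the planned end. All parameters are assumptions chosen for illustration.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, conv_b: int, n: int) -> float:
    """Two-sided pooled z-test p-value for two groups of equal size n."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 1.0
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rates(experiments=300, looks=10, visitors_per_look=200,
                         rate=0.10, alpha=0.05, seed=7):
    """Simulate A/A tests (no real difference) under two stopping rules."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(experiments):
        conv_a = conv_b = n = 0
        stopped_early = False
        for _ in range(looks):
            n += visitors_per_look
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            # Peeking rule: declare a winner the first time p < alpha.
            if not stopped_early and p_value(conv_a, conv_b, n) < alpha:
                stopped_early = True
        peeking_hits += stopped_early
        # Fixed-horizon rule: test once, after all planned traffic has arrived.
        fixed_hits += p_value(conv_a, conv_b, n) < alpha
    return peeking_hits / experiments, fixed_hits / experiments


peeking, fixed = false_positive_rates()
print(f"false positives with peeking: {peeking:.0%}, at the planned end: {fixed:.0%}")
```

With repeated peeking, the false-positive rate typically lands well above the nominal 5%, while the single planned check stays close to it.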

Conclusion

To sum up this A/B testing guide, it’s important to emphasize that an A/B test is a powerful tool for improving your product or marketing campaigns. By testing different versions of something and measuring the outcomes, you can make data-driven decisions about how to optimize for better results. To get started with A/B testing, you should have a clear goal, create two versions to test, randomly assign users, run the test for a long enough time, and use statistical analysis to determine the winner.

With these steps in mind, you can use A/B testing to continuously improve your product and drive better outcomes for your business. Remember to approach A/B testing with a commitment to data-driven decision-making and a willingness to iterate and improve based on the results.

A/B Testing Frequently Asked Questions

What is A/B testing?

A/B testing is a method of comparing two versions of something (e.g., a website, an email, an ad) to see which one performs better. It involves randomly assigning users to one of the versions and measuring the outcomes.

Why is A/B testing important?

A/B testing allows you to make data-driven decisions about how to improve your product or marketing campaigns. By testing different versions of something, you can identify what works and what doesn’t and optimize for better results.

What are some things you can A/B test?

You can A/B test pretty much anything, as long as you have a clear goal and a way to measure success. Common things to test include website design, email subject lines, ad copy, pricing, and product features.

How do you set up an A/B test?

To set up an A/B test, you need to create two versions of the thing you want to test (e.g., two different email subject lines). You then randomly assign users to one of the versions and measure the outcomes (e.g., open rates, click-through rates). You can use an A/B testing tool to help you set up and run the test.

How long should you run an A/B test?

The length of an A/B test depends on several factors, including the size of your audience, the magnitude of the difference you’re trying to detect, and the level of statistical significance you want to achieve. As a general rule of thumb, you should aim to run your test for at least a week to capture any weekly patterns in user behavior.

How do you determine the winner of an A/B test?

To determine the winner of an A/B test, you need to compare the outcomes of the two versions and see which one performed better. You can use statistical analysis to determine whether the difference between the two versions is significant enough to be meaningful.

What do you do after an A/B test?

After an A/B test, you should analyze the results and use them to inform your next steps. If one version performed significantly better than the other, you should consider implementing the winning version. If the difference is not significant, you may need to run further tests or make other changes to improve your results.

What are some common mistakes to avoid in A/B testing?

Common mistakes in A/B testing include not having a clear hypothesis, not testing for long enough, not segmenting your audience properly, and not analyzing the results rigorously. It’s important to approach A/B testing with a clear plan and a commitment to data-driven decision-making.